My last few posts were focused on Planning and Budgeting on the cloud, but today I'll move back to on-premise,,,but I promise it will be fun. So let's get to work. I have a simple business rule that pushes data from a BSO cube to an ASO cube with the following logic:

- User selects an Entity

- Export multiple data files for the selected Entity from the BSO cube

- Maxl to clear the specific region in the ASO cube

- Initialize load buffer

- Load data in buffer

- Load buffer to Essbase

That's it!! straightforward and simple....well, not really! not when you have 20 users triggering the same request at the same time (within seconds or minutes..). Why? because you are most likely to end up with Essbase error

ERROR - 1270095 - Specified load buffer resource usage [1] is above currently

available value [0].

This error means if one user is locking the databse loading data, another user cannot perform another parallel data load operation, not in a straightforward way anyways.

Here is an explanation of what can happen/not in the backend when triggered from Maxl

Ok, that's easy to control using load_buffers and splitting the resource usage? not really, since the request is user-triggered and not admin scheduled tasks that run on specific schedule (one after another with predetermined load buffers and resource usage per operation). So in order to get this to work, I need two things:

- For every step that may cause resource usage conflict (Step #2, 3 and 5) keep looping the request until it's completed successfully

- Random numbers for load buffers

So how can I do this? well, the answer is Shell scripting and a little bit of Groovy!

Ok, let's get down to business,,,

1- Business rule:

This rule will simply call a groovy script and pass three arguments (Entity, Version, and Environment sub var).

The first two variables are passed from Planning and are based on user selection and the third variable is a an environment substitution variable (static and never changes eg. devevelopment/UAT/production) helps moving artifacts between environments in a seamless fashion...

1- Business rule:

This rule will simply call a groovy script and pass three arguments (Entity, Version, and Environment sub var).

The first two variables are passed from Planning and are based on user selection and the third variable is a an environment substitution variable (static and never changes eg. devevelopment/UAT/production) helps moving artifacts between environments in a seamless fashion...

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 | /*EPM Simplified - Omar Shubeilat*/

RUNJAVA com.hyperion.calcmgr.common.cdf.CDFLogger "clear";

RUNJAVA com.hyperion.calcmgr.common.cdf.CDFLogger "system";

RUNJAVA com.hyperion.calcmgr.common.cdf.CDFLogger "level" "FINEST";

RUNJAVA com.hyperion.calcmgr.common.groovy.cdf.GroovyCDF

"compile"

"-file"

&GroovyScript

"Ent,Env,Ver"

;

/* Force create the block*/

Set CreateNonMissingBlk On;

Fix("FY18","No_Account","Budget","Working",{Var_Entity})

"BegBalance"

(

@CalcMgrGroovyNumber(

&GroovyScript,

"",

@List(Ent,Env,Ver),

@List(@Name(@CurrMbr("Entity")),&Env,@Name(@CurrMbr("Version")))

);

)

EndFix

|

2- Groovy script

This script will call the main windows batch file, and wait for it to finish before it returns back to Planning. I need to return an integer because I'm using the function @CalcMgrGroovyNumber(), you can see I'm simply returning 1 and not an exit code from the process itself, this is how I decided to go about this in this post, so again feel free to take the logic and apply it however you may see fit.

1 2 3 4 5 6 7 8 | Integer RunPS(String Ent,String Env, String Ver) { def proc = "C:/${Env}/PS/RunPS.bat ${Ent} ${Env} ${Ver}".execute() proc.waitFor() proc.closeStreams() return 1 } RunPS(Ent,Env,Ver) |

I'm using the method waitFor, you can also use waitForOrKill() if you don't want this to run indefinitely,,, in either case, there has to be some kind of system-level monitoring process to highlight any stuck/hung processes in the background,,, in this post I'm going to show a simple way for users/admin to check what is happening in the back-end.

3 - Windows batch script

The script looks a bit creepy when you look at it, but it's really simple and not complicated, I'll break it down and explain each section. The main idea here is to execute the Maxl statement required for every step in the first diagram (scroll up :) ) and for steps 2 and 3 I need a loop that will exit when the step is completed. Why? I must clear data in the target region and I must initialize a load buffer to be able to finish the whole process successfully.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 | ECHO OFF REM BSO SET BSOAPP=EPMDEMO SET BSODB=BUDGET REM ASO SET ASOAPP=EPMDEMOR SET ASODB=REPORT REM ARGUMENTS %1 %2... SET ENTITY=%1 SET ENV=%2 SET VERSION=%3 REM DEFINE VARIABLES SET WORKDIR=C:\%ENV%\PS\ SET EXPORTDIR=%WORKDIR%Export\ SET TIMESTAMP=%DATE:/=-%_%TIME::=-% SET TIMESTAMP=%TIMESTAMP: =% REM INIT BUFFER ID SET /A BUFFERID=%RANDOM% * 100 / 20001 + 10000 REM EXPORT SCRIPT SET MXL_EXPORT_FILE_NAME=%WORKDIR%Maxl_Export_%ENTITY%_%TIMESTAMP%.mxl SET MXL_EXPORT_LOG_NAME=%WORKDIR%Maxlmxl_Export_%ENTITY%_%TIMESTAMP%.log SET EXPORT_DATA_FILE=%DATA_EXPORT_DIR%%ENTITY%_%TIMESTAMP%.txt ECHO login %HYP_ADMIN% %HYP_PWD% on %SERVER%; > %MXL_EXPORT_FILE_NAME% ECHO execute calculation ' >> %MXL_EXPORT_FILE_NAME% ECHO Set DataExportOptions >> %MXL_EXPORT_FILE_NAME% ECHO { >> %MXL_EXPORT_FILE_NAME% ECHO DataExportLevel Level0; >> %MXL_EXPORT_FILE_NAME% ECHO DataExportDynamicCalc Off; >> %MXL_EXPORT_FILE_NAME% ECHO DataExportColFormat On; >> %MXL_EXPORT_FILE_NAME% ECHO DataExportColHeader "Period"; >> %MXL_EXPORT_FILE_NAME% ECHO DataExportDimHeader Off; >> %MXL_EXPORT_FILE_NAME% ECHO DataExportOverwriteFile On; >> %MXL_EXPORT_FILE_NAME% ECHO }; >> %MXL_EXPORT_FILE_NAME% ECHO FixParallel(4,@Relative("%ENTITY%",0)) >> %MXL_EXPORT_FILE_NAME% ECHO Fix(%VERSION%, @LevMbrs("Account",0),"Budget") >> %MXL_EXPORT_FILE_NAME% ECHO DataExport "File" "," "%EXPORT_DATA_FILE%" ; >> %MXL_EXPORT_FILE_NAME% ECHO EndFix >> %MXL_EXPORT_FILE_NAME% ECHO EndFixParallel' on %BSOAPP%.%BSODB%; >> %MXL_EXPORT_FILE_NAME% ECHO execute calculation 'Fix(%ENTITY%,"Working","Budget","FY19")"BegBalance"("Refresh Status" = 0;)EndFix' on %BSOAPP%.%BSODB%; >> %MXL_EXPORT_FILE_NAME% essmsh %MXL_EXPORT_FILE_NAME% > %MXL_EXPORT_LOG_NAME% 2>&1 REM CLEAR SCRIPT SET MXL_CLR_FILE_NAME=%WORKDIR%Maxl_Clear_%ENTITY%_%TIMESTAMP%.mxl SET MXL_CLR_LOG_NAME=%WORKDIR%Maxlmxl_Clear_%ENTITY%_%TIMESTAMP%.log ECHO login %HYP_ADMIN% %HYP_PWD% on %SERVER%; > %MXL_CLR_FILE_NAME% ECHO alter database %ASOAPP%.%ASODB% clear data in region 'CrossJoin({[%ENTITY%]},{[%VERSION%]})'; >> %MXL_CLR_FILE_NAME% ECHO IfError 'Error'; >> %MXL_CLR_FILE_NAME% ECHO execute calculation 'Fix(%ENTITY%,"Working","Budget","FY19")"BegBalance"("Refresh Status" = 2;)EndFix' on %BSOAPP%.%BSODB%; >> %MXL_CLR_FILE_NAME% ECHO exit; >> %MXL_CLR_FILE_NAME% ECHO define label 'Error'; >> %MXL_CLR_FILE_NAME% ECHO execute calculation 'Fix(%ENTITY%,"Working","Budget","FY19")"BegBalance"("Refresh Status" = 1;)EndFix' on %BSOAPP%.%BSODB%; >> %MXL_CLR_FILE_NAME% ECHO exit 1; >> %MXL_CLR_FILE_NAME% :LOOPCLR essmsh %MXL_CLR_FILE_NAME% > %MXL_CLR_LOG_NAME% 2>&1 IF NOT %ERRORLEVEL% EQU 0 GOTO :LOOPCLR REM INITIALIZE BUFFER SET MXL_INIT_FILE_NAME=%WORKDIR%Maxl_InitBuffer_%ENTITY%_%TIMESTAMP%.mxl SET MXL_INIT_LOG_NAME=%WORKDIR%Maxlmxl_InitBuffer_%ENTITY%_%TIMESTAMP%.log ECHO login %HYP_ADMIN% %HYP_PWD% on %SERVER%; > %MXL_INIT_FILE_NAME% ECHO alter database %ASOAPP%.%ASODB% initialize load_buffer with buffer_id %BUFFERID%; >> %MXL_INIT_FILE_NAME% ECHO IfError 'Error'; >> %MXL_INIT_FILE_NAME% ECHO execute calculation 'Fix(%ENTITY%,"Working","Budget","FY19")"BegBalance"("Refresh Status" = 4;)EndFix' on %BSOAPP%.%BSODB%; >> %MXL_INIT_FILE_NAME% ECHO exit; >> %MXL_INIT_FILE_NAME% ECHO define label 'Error'; >> %MXL_INIT_FILE_NAME% ECHO execute calculation 'Fix(%ENTITY%,"Working","Budget","FY19")"BegBalance"("Refresh Status" = 3;)EndFix' on %BSOAPP%.%BSODB%; >> %MXL_INIT_FILE_NAME% ECHO exit 1; >> %MXL_INIT_FILE_NAME% :LOOPINIT essmsh %MXL_INIT_FILE_NAME% > %MXL_INIT_LOG_NAME% 2>&1 IF NOT %ERRORLEVEL% EQU 0 GOTO :LOOPINIT REM LOAD TO BUFFER SET MXL_LOAD_TO_BUFFER_FILE_NAME=%WORKDIR%Maxl_LoadToBuffer_%ENTITY%_%TIMESTAMP%.mxl SET MXL_LOAD_TO_BUFFER_LOG_NAME=%WORKDIR%Maxl_LoadtoBuffer_%ENTITY%_%TIMESTAMP%.log SET MXL_LOAD_TO_BUFFER_ERR_FILE_NAME==%WORKDIR%Maxl_LoadtoBuffer_Error_%ENTITY%_%TIMESTAMP%.log SET LOAD_DATA_FILE1=%DATA_EXPORT_DIR%%ENTITY%_%TIMESTAMP%_T1.txt SET LOAD_DATA_FILE2=%DATA_EXPORT_DIR%%ENTITY%_%TIMESTAMP%_T2.txt SET LOAD_DATA_FILE3=%DATA_EXPORT_DIR%%ENTITY%_%TIMESTAMP%_T3.txt SET LOAD_DATA_FILE4=%DATA_EXPORT_DIR%%ENTITY%_%TIMESTAMP%_T4.txt ECHO login %HYP_ADMIN% %HYP_PWD% on %SERVER%; > %MXL_LOAD_TO_BUFFER_FILE_NAME% ECHO import database %ASOAPP%.%ASODB% data from data_file '%LOAD_DATA_FILE1%' using server rules_file 'LR' to load_buffer with buffer_id %BUFFERID% on error write to '%MXL_LOAD_TO_BUFFER_ERR_FILE_NAME%'; >> %MXL_LOAD_TO_BUFFER_FILE_NAME% ECHO import database %ASOAPP%.%ASODB% data from data_file '%LOAD_DATA_FILE2%' using server rules_file 'LR' to load_buffer with buffer_id %BUFFERID% on error write to '%MXL_LOAD_TO_BUFFER_ERR_FILE_NAME%'; >> %MXL_LOAD_TO_BUFFER_FILE_NAME% ECHO import database %ASOAPP%.%ASODB% data from data_file '%LOAD_DATA_FILE3%' using server rules_file 'LR' to load_buffer with buffer_id %BUFFERID% on error write to '%MXL_LOAD_TO_BUFFER_ERR_FILE_NAME%'; >> %MXL_LOAD_TO_BUFFER_FILE_NAME% ECHO import database %ASOAPP%.%ASODB% data from data_file '%LOAD_DATA_FILE4%' using server rules_file 'LR' to load_buffer with buffer_id %BUFFERID% on error write to '%MXL_LOAD_TO_BUFFER_ERR_FILE_NAME%'; >> %MXL_LOAD_TO_BUFFER_FILE_NAME% ECHO IfError 'Error'; >> %MXL_LOAD_TO_BUFFER_FILE_NAME% ECHO execute calculation 'Fix(%ENTITY%,"Working","Budget","FY19")"BegBalance"("Refresh Status" = 6;)EndFix' on %BSOAPP%.%BSODB%; >> %MXL_LOAD_TO_BUFFER_FILE_NAME% ECHO exit; >> %MXL_LOAD_TO_BUFFER_FILE_NAME% ECHO define label 'Error'; >> %MXL_LOAD_TO_BUFFER_FILE_NAME% ECHO execute calculation 'Fix(%ENTITY%,"Working","Budget","FY19")"BegBalance"("Refresh Status" = 5;)EndFix' on %BSOAPP%.%BSODB%; >> %MXL_LOAD_TO_BUFFER_FILE_NAME% ECHO exit 1; >> %MXL_LOAD_TO_BUFFER_FILE_NAME% essmsh %MXL_LOAD_TO_BUFFER_FILE_NAME% > %MXL_LOAD_TO_BUFFER_LOG_NAME% 2>&1 REM LOAD FROM BUFFER SET MXL_LOAD_TO_ESSASE_FILE_NAME=%WORKDIR%Maxl_LoadToEssbase_%ENTITY%_%TIMESTAMP%.mxl SET MXL_LOAD_TO_ESSASE_LOG_NAME=%WORKDIR%Maxlmxl_LoadToEssbase_%ENTITY%_%TIMESTAMP%.log ECHO login %HYP_ADMIN% %HYP_PWD% on %SERVER%; > %MXL_LOAD_TO_ESSASE_FILE_NAME% ECHO import database %ASOAPP%.%ASODB% data from load_buffer with buffer_id %BUFFERID% ; >> %MXL_LOAD_TO_ESSASE_FILE_NAME% ECHO IfError 'Error'; >> %MXL_LOAD_TO_ESSASE_FILE_NAME% ECHO execute calculation 'Fix(%ENTITY%,"Working","Budget","FY19")"BegBalance"("Refresh Status" = 8;)EndFix' on %BSOAPP%.%BSODB%; >> %MXL_LOAD_TO_ESSASE_FILE_NAME% ECHO exit; >> %MXL_LOAD_TO_ESSASE_FILE_NAME% ECHO define label 'Error'; >> %MXL_LOAD_TO_ESSASE_FILE_NAME% ECHO execute calculation 'Fix(%ENTITY%,"Working","Budget","FY19")"BegBalance"("Refresh Status" = 7;)EndFix' on %BSOAPP%.%BSODB%; >> %MXL_LOAD_TO_ESSASE_FILE_NAME% ECHO exit 1; >> %MXL_LOAD_TO_ESSASE_FILE_NAME% essmsh %MXL_LOAD_TO_ESSASE_FILE_NAME% > %MXL_LOAD_TO_ESSASE_LOG_NAME% 2>&1 EXIT |

Let me explain what is happening up there...

Lines 3-7: Define my application and database variables

Lines 9-11: Arguments passed from the business rule

Lines 16-17: Generate a time stamp

Line 20: Generate random number for the load buffer

Lines 23-44: Executing the first step (export data from BSO)

Lines 47-61: Executing the second step (clear data in target region) - notice the loop at line 61 (this is to make sure clear statement was executed successfully)

Lines 65-79: Execute the third step (generate load buffer with the random buffer id)

Lines 82-106: Execute the fourth step (load data files to buffer)

Lines 109-120: Execute the fifth and final step (load buffer to Essbase)

Voila! that is it 😀

After running the business rule,,, a couple of files (maxls and logs) will be created for each step as shown here. (I'm not deleting the files but you can add a step to do that if you want)

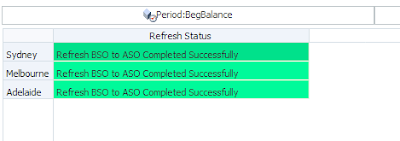

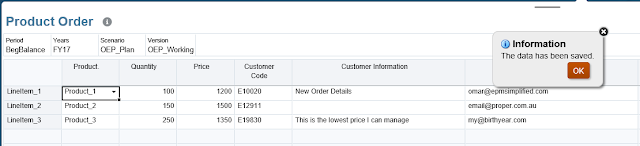

There is one more thing I added to give the users an idea of what is happening in the back end in case the business rule is taking more time than expected (remember I'm not using the method waitForOrKill() which can be handy or not depends on how you look at it). Back to Planning ,I have created a data form to show the refresh status for each entity that ran the business rule.

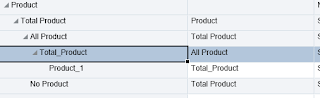

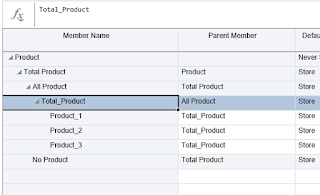

Here is the smart list:

And my data form has few colorful validations (green for ok steps and red for the rest).

So here is my data form,, you can see the refresh process is still looping at the first step because someone else has locked Essbase (either a data load or clear data operation) and once's it's finished/unlocked the step will finish executing and proceed to the next step.

Pretty simple really,, as for the status how I'm setting this up? if you go back to my batch script and check the lines (43, 53, 56, 71, 74, 100, 103, 115, and 118) here I'm setting the status after each step. The logic is simple, if there was an error then flag it. an example shown here for the clear data step.

ECHO IfError 'Error'; >> %MXL_CLR_FILE_NAME% ECHO execute calculation 'Fix(%ENTITY%,"Working","Budget","FY19")"BegBalance"("Refresh Status" = 2;)EndFix' on %BSOAPP%.%BSODB%; >> %MXL_CLR_FILE_NAME% ECHO exit; >> %MXL_CLR_FILE_NAME% ECHO define label 'Error'; >> %MXL_CLR_FILE_NAME% ECHO execute calculation 'Fix(%ENTITY%,"Working","Budget","FY19")"BegBalance"("Refresh Status" = 1;)EndFix' on %BSOAPP%.%BSODB%; >> %MXL_CLR_FILE_NAME% ECHO exit 1; >> %MXL_CLR_FILE_NAME%

Voila, we are done!

Hope this was helpful. 😀