It's been a while since I last wrote an article;

hopefully this will change in the coming months. This is going to be a quick

one about a cool (must-have) feature in Enterprise Data Management Cloud

Service (EDMCS): Node Type Qualifiers and Subscriptions. I'm not

going to deep dive into the product and I'm assuming you have some functional

knowledge already.

According to Oracle documentation:

Quote

A node type qualifier is a prefix that you define

for a node type which allows for unique node type naming. You define node type

qualifiers for external applications that use unique naming for node types or

dimensions. For example, an application may use these prefixes for entities,

accounts, and cost centers: ENT_, ACCT_, and CC_.

Qualifiers are used by Oracle Enterprise Data

Management Cloud when you compare nodes, display viewpoints side by side,

and drag and drop from one viewpoint to another.

For example, if you have a cost center named 750 in

your general ledger application and you want to add it to your Oracle

Planning and Budgeting Cloud application, you can drag and drop the cost

center from the general ledger viewpoint to the Oracle Planning and

Budgeting Cloud viewpoint. With the node type qualifier defined and a node

type converter set up, the cost center in Oracle Planning and Budgeting

Cloud will be added as CC_750.

End Quote

In this post I will show how to implement the following

scenario:

1. Manage Account segment metadata

in General Ledger viewpoint

2. Use subscriptions to automatically

update the PBCS Account viewpoint based on changes in the General Ledger Account

viewpoint

Let's get on with it. I have two applications set up

for this.

1. General Ledger (my source

application)

2. PBCS (my target application)

I also have a Node Type Qualifier for my PBCS

Account dimension (Good old Essbase won't let you have duplicate member names).

As you can see in the screenshot below, I have specified A as my Account member

prefix qualifier. This means if I add an account value 10000 in ERP it would be

automatically created as A10000 in PBCS.

N

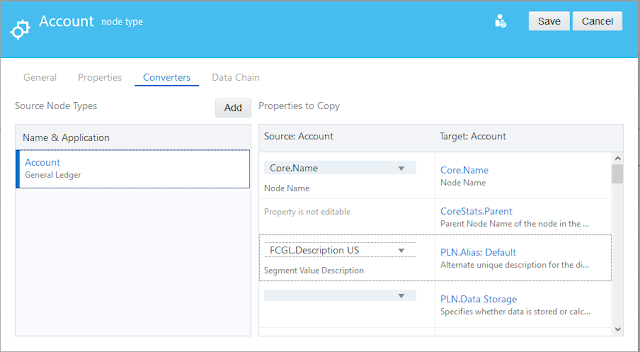

ext step is to create a Node Type Converter to

convert the General Ledger Account properties in PBCS viewpoint. This is needed

to capture the Alias of the member based on the description property in General

Ledger Account segment.
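To make the combined effect of the qualifier and the converter concrete, here is a minimal Python sketch. It is not the EDMCS API: the `Node` class, the `convert_gl_account_to_pbcs` function, the property names `Description` and `Alias`, and the sample description are all hypothetical stand-ins for what the Node Type Qualifier and Node Type Converter do conceptually.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """Toy stand-in for a node in a viewpoint (hypothetical, not an EDMCS type)."""
    name: str
    properties: dict = field(default_factory=dict)


def convert_gl_account_to_pbcs(gl_node: Node, qualifier: str = "A") -> Node:
    """Mimic the qualifier (name prefix) and the converter (property mapping)."""
    return Node(
        name=f"{qualifier}{gl_node.name}",
        # Assumed mapping: the GL description becomes the PBCS alias.
        properties={"Alias": gl_node.properties.get("Description", "")},
    )


# Placeholder description, made up for illustration only.
gl_account = Node("10000", {"Description": "Sample description"})
pbcs_account = convert_gl_account_to_pbcs(gl_account)
print(pbcs_account.name, pbcs_account.properties["Alias"])
```

The qualifier only touches the node name, while the converter decides which source property feeds which target property. Keeping the two ideas separate is what lets the same GL account appear in PBCS with a unique name and the right alias.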

The next step is to create a subscription to link the PBCS

Account viewpoint to General Ledger's Account viewpoint and this will ensure

whatever changes you make in GL are captured in PBCS. I opted for the Auto-Submit

subscription type which means the changes in the source viewpoint (General

Ledger) will be applied without manual/user intervention in the target

viewpoint (PBCS in this example).
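The difference between an auto-submitted subscription and one that waits for a user can be sketched in a few lines of Python. This is a toy model under my own assumptions, not how EDMCS is implemented: the `Viewpoint` and `Subscription` classes, the `on_source_add` method, the status labels `Draft` and `Completed`, and the account number are all hypothetical. Name prefixing is left out on purpose to keep the focus on the submit behaviour.

```python
from dataclasses import dataclass, field


@dataclass
class Viewpoint:
    """Toy viewpoint: a name plus the set of node names it holds."""
    name: str
    nodes: set = field(default_factory=set)


@dataclass
class Subscription:
    """Links a target viewpoint to a source viewpoint (hypothetical model)."""
    source: Viewpoint
    target: Viewpoint
    auto_submit: bool = True

    def on_source_add(self, node_name: str) -> dict:
        """Build a request for the target and apply it if auto-submit is on."""
        request = {"viewpoint": self.target.name, "node": node_name, "status": "Draft"}
        if self.auto_submit:
            self.target.nodes.add(node_name)
            request["status"] = "Completed"
        return request


gl = Viewpoint("General Ledger Account")
pbcs = Viewpoint("PBCS Account")
subscription = Subscription(source=gl, target=pbcs, auto_submit=True)

gl.nodes.add("99999")  # arbitrary example account added in the source
request = subscription.on_source_add("99999")
print(request["status"], sorted(pbcs.nodes))
```

With `auto_submit=False` the request would stay in the draft state in this model and the target viewpoint would be left untouched until someone reviews it.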

Now I will show the current Account viewpoint in

both General Ledger and PBCS applications. I have created a simple Account

hierarchy and as you can see I have two child accounts under the Account parent

10000 (A10000 in PBCS)

GL

PBCS

I'll go and create a new request to add a new

Account member in GL view and see how it gets captured in PBCS. As shown in the

screenshot below, I created an Account 10003 with description Mobile Allowance

and will go ahead and submit the request.

After successfully submitting the request, EDMCS

will create a subscription request to update the change in PBCS. As shown

below, there is one Interactive request in General Ledger and another

Subscription request in PBCS. Both requests had 1 item and reported no issues.

So far so good.

Here are the request details showing one Account

node was created and prefixed with "A" in PBCS, and the Alias was

captured using the Node Type Converter. The cool thing worth mentioning is how

EDMCS managed to insert the member in the correct position even though the

parent accounts in GL and PBCS have different names (10000 and A10000). That's

pretty awesome if you ask me!
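One plausible way to picture this (an assumption about the behaviour, not a description of EDMCS internals) is that the qualifier is applied to the parent reference as well as to the new node, so both names are translated before the node is placed. The `requalify` helper below is hypothetical and only illustrates that idea in Python.

```python
def requalify(name: str, source_qualifier: str, target_qualifier: str) -> str:
    """Swap the source node type's prefix for the target's (illustrative only)."""
    if source_qualifier and name.startswith(source_qualifier):
        name = name[len(source_qualifier):]
    return f"{target_qualifier}{name}"


# GL side of the request: child 10003 was added under parent 10000.
gl_child, gl_parent = "10003", "10000"

# GL has no qualifier in this example; the PBCS Account node type uses "A".
pbcs_child = requalify(gl_child, "", "A")
pbcs_parent = requalify(gl_parent, "", "A")
print(pbcs_child, "->", pbcs_parent)
```

Because child and parent go through the same translation, the relationship between them survives even though neither name matches across the two viewpoints.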

Finally, I will check my PBCS Account viewpoint and

confirm the member node has been created and placed under the correct parent.

And that is it for today, hope you find this

useful. EDMCS is pretty awesome and I think what is coming in the future will

make this one of the best enterprise data management tools in the market.

Well done Oracle!