I've had a bit of time on my hands lately thanks to the current COVID lockdown here in Australia, so I got to spend more time setting up my Oracle Cloud Infrastructure (OCI) tenancy.

One of the topics I wanted to write about is a fully automated cloud-based backup solution for EPM Cloud in OCI. The solution I'm going to cover in this post is 100% in the cloud with no on-premises resources or dependencies at all! Pretty cool if you ask me, so let's get to it.

Here is a very high-level architectural diagram of the solution:

So, the main OCI components we are looking at here are as follows:

- Linux Virtual Machine

- Virtual Cloud Network

- Object Storage bucket for backup files

- Object Storage bucket for log files

- Logging services

- Service Connector Hub

- Topics, Subscriptions and Alarms

- EPM Automate and CLI

I will explain the OCI components used a little bit more as I go through the technical solution in the following sections. The idea in a nutshell is to use a Linux machine to do the job for us. What would that process look like from start to end?

- Check if the backup file exists in Object Storage (this can be easily skipped with timestamps)

- Delete the file in Object Storage if it exists

- Log in to EPM Cloud and copy the Artifact Snapshot to Object Storage

- Send job status email notifications

- Delete old backup files in OCI to maintain n number of days of backups
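The first step notes that timestamps make the existence check unnecessary. As a minimal sketch of that idea, assuming an hourly granularity is enough, the object name can carry the date and hour so that each run writes a new object. The helper name `timestamped_name` and the `Artifact_Snapshot` prefix are hypothetical, not part of the original script.

```shell
# Sketch: build a timestamped object name so each run writes a new object.
# timestamped_name is a hypothetical helper; the second argument is optional
# and defaults to the current date and hour.
timestamped_name() {
  local prefix="$1"
  local stamp="${2:-$(date +%Y%m%d_%H)}"
  printf '%s_%s.zip\n' "$prefix" "$stamp"
}

# Usage (commented out; the prefix is a placeholder):
# snapshotname=$(timestamped_name "Artifact_Snapshot")
```

With names like these, the retention step becomes the only place where old objects are removed.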

One last thing before I show how to set up those steps and automate the solution from start to end: the following is a list of prerequisites that are needed for the solution to work.

1. Create a user to use with OCI-related jobs

2. Create a group and add the user to it

3. Generate an Auth Token for the user from step 1 (EPM Automate copyToObjectStorage requires an Auth Token, NOT the OCI console login password)

4. Create an IAM policy to grant the group created in step 2 the appropriate access to Object Storage (here is a snippet of my policy statements, ObjectStorage is the name of the group)

Assigning the OBJECT_DELETE permission is optional. The user just needs to be able to create new files in Object Storage, because the EPM Automate command requires a user with sufficient Object Storage privileges.

Now that we are done with the prerequisites, let me start with the components of the overall solution:

Bash script

This is the main script I will be adding to the list of cron jobs.

Now let me highlight the bits that I think need some explanation.

The next line uses the OCI CLI list objects command and jq (a JSON processor) to retrieve the name of the last object in the bucket listing, treated here as the latest backup file, and stores it in a variable to be used in the next section.

    latestfile=`oci os object list --bucket-name $bucket | jq -r '.data[-1].name'`

Example:

This section checks whether the snapshot file we are about to copy to Object Storage already exists in OCI. If it exists, the file is deleted before the backup process kicks off. This section can be easily replaced by an hourly timestamp if required, but for demo purposes I have decided to keep it in this post just in case someone out there benefits from it.

    #delete file in OCI
    if [[ $latestfile == $snapshotname ]]
    then
        echo "file exists $latestfile $snapshotname"
        oci os object delete --bucket-name $bucket --object-name $snapshotname --force
    else
        echo "file does not exist $latestfile $snapshotname"
    fi
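The comparison above only matches when the snapshot happens to be the last object in the listing, which, as far as I know, is ordered by object name rather than by creation time. A possible alternative is to ask for the specific object directly. This is a sketch only: `object_exists` is a hypothetical helper name, and it assumes `oci os object head` exits with a non-zero status when the object cannot be found.

```shell
# Sketch: check for one specific object instead of inspecting the last
# listed item. object_exists is a hypothetical helper; it assumes that
# `oci os object head` fails (non-zero exit) for a missing object.
object_exists() {
  local bucket="$1" name="$2"
  oci os object head --bucket-name "$bucket" --name "$name" > /dev/null 2>&1
}

# Usage (commented out so the snippet has no side effects):
# if object_exists "$bucket" "$snapshotname"; then
#   oci os object delete --bucket-name "$bucket" --object-name "$snapshotname" --force
# fi
```

This keeps the check independent of how many other objects are in the bucket or how they are named.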

Next is the "copyToObjectStorage" EPM Automate command, and the next block is to check if the copy was successful or not, and finally send email job status notifications.

    $epmauto copyToObjectStorage "Artifact Snapshot" "$oci_user" "$oci_token" https://swiftobjectstorage.ap-sydney-1.oraclecloud.com/v1/NamespaceOCIDgoeshere/$bucket/$snapshotname &> $logmessage
    if [ $? -eq 0 ]
    then
        $epmauto sendMail "$email" "EPM Cloud automated backup completed successfully" Body="EPM Cloud automated backup completed successfully"
    else
        emailBody=$(tac $logmessage|grep -m 1 .)
        $epmauto sendMail "$email" "EPM Cloud automated backup completed with errors" Body="EPM Cloud automated backup completed with the following error message: $emailBody"
    fi
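The destination URL in the command above follows the Swift-compatible pattern of region, namespace, bucket and object name, where the `NamespaceOCIDgoeshere` placeholder stands for the tenancy's Object Storage namespace. A small sketch of assembling it from its parts, assuming that pattern; `swift_url` is a hypothetical helper and every argument is a placeholder.

```shell
# Sketch: assemble the Swift-compatible destination URL from its parts.
# swift_url is a hypothetical helper; all four arguments are placeholders.
swift_url() {
  local region="$1" namespace="$2" bucket="$3" object="$4"
  printf 'https://swiftobjectstorage.%s.oraclecloud.com/v1/%s/%s/%s\n' \
    "$region" "$namespace" "$bucket" "$object"
}

# Usage (commented out; the namespace can be looked up with `oci os ns get`):
# url=$(swift_url ap-sydney-1 "$namespace" "$bucket" "$snapshotname")
```

Keeping the region and namespace in variables makes it easier to reuse the script in another tenancy.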

If you look carefully, you will notice I'm saving the output of the copyToObjectStorage EPM Automate command in a log file and the reason for that is I need a way to catch the error message and include it in the email body.

&> $logmessage

Since this is a REST based command, the first line of the output might not be the error message we are looking for. So I'm simply writing a new file every time the process is run and if the copy operation failed, then I'm fetching the last line in that log file and including it in the email body.

Here is an example of a log file containing an error message and how to get the error message out of it.
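To make the extraction step concrete, here is a small self-contained sketch of the same `tac` and `grep` pipeline wrapped in a function. The helper name `last_nonempty_line` is hypothetical, and the log content in the demo is made up for illustration; it is not real EPM Automate output.

```shell
# Sketch: return the last non-empty line of a file, as the script does for
# the email body. last_nonempty_line is a hypothetical helper name.
last_nonempty_line() {
  # tac reverses the line order; grep -m 1 . stops at the first line
  # that contains at least one character.
  tac "$1" | grep -m 1 .
}

# Demo with made-up log content (not real EPM Automate output):
demo_log=$(mktemp)
printf 'first line of output\nhypothetical error text\n\n' > "$demo_log"
last_nonempty_line "$demo_log"   # prints: hypothetical error text
rm -f "$demo_log"
```

Trailing blank lines are skipped, so the email body still carries the last meaningful line of the log.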

The final block of the script keeps only n backup files in the Object Storage bucket. To get the name of the file that must be deleted, I'm using jq to parse the JSON output of "oci os object list", sort it by creation date and fetch the first item in the array (i.e. the oldest item) to pass on to the CLI delete command.
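As a variation on this retention rule, the selection of files to delete can be separated from the deletion itself, so the bucket only needs to be listed once per run. This is a sketch under that assumption, not the script's actual code: `select_expired` is a hypothetical helper that reads object names sorted oldest-first and prints the ones beyond the retention count.

```shell
# Sketch: given object names sorted oldest-first on stdin, print the ones
# that exceed the retention count. select_expired is a hypothetical helper.
select_expired() {
  local keep="$1"
  # Buffer every line, then print all but the newest $keep entries.
  awk -v keep="$keep" '
    { names[NR] = $0 }
    END { for (i = 1; i <= NR - keep; i++) print names[i] }
  '
}

# Usage (commented out; needs the OCI CLI and jq, as in the script):
# oci os object list -bn "$bucket" \
#   | jq -r '.data | sort_by(."time-created") | .[].name' \
#   | select_expired "$numberofdaytokeeps" \
#   | while IFS= read -r name; do
#       oci os object delete --bucket-name "$bucket" --object-name "$name" --force
#     done
```

Because the helper only reads names from standard input, it can be tried out with plain text before pointing it at a real bucket.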

    #Delete backups older than a week
    i=`oci os object list -bn $bucket | jq '.data | length'`
    for ((e=$i; e > $numberofdaytokeeps; e-- ))
    do
        x=`oci os object list -bn $bucket | jq -r '.data | map({"name" : .name, "date" : ."time-created"}) | sort_by(.date) | .[0].name'`
        echo "Deleting the $e th file: $x"
        oci os object delete --bucket-name $bucket --object-name $x --force
    done

Object storage

I'm using two OS buckets, one to store the EPM backup files and one for the OCI logs.

I have enabled write logs on the main bucket so that the logs are available for the next step.

Service connector

I'm using a service connector to automatically move the logs to Object Storage. I've configured this connector to move the logs every time there is a "put request" activity.

Alarms

The final component in the solution (really a nice-to-have, but worth having) is an alarm definition that triggers email notifications if no files were copied to the storage bucket in the last 24 hours.

As you can see the alarm status is "ok" which means there has been a successful put request in the specified OS bucket.

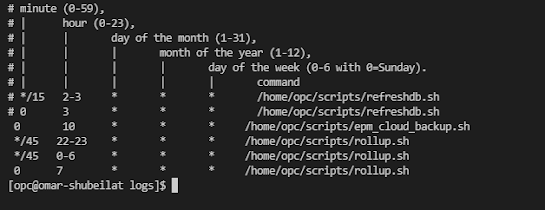

Cron jobs

The final piece of the puzzle is to automate this process by creating a crontab entry to run the bash script.
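For illustration, a daily crontab entry has five schedule fields followed by the command to run. The sketch below prints such a line; `cron_entry` is a hypothetical helper, and the schedule, script path and log path are placeholders rather than my actual values.

```shell
# Sketch: print a crontab line that runs a script once a day.
# cron_entry is a hypothetical helper; the script path and log path used
# below are placeholders.
cron_entry() {
  local minute="$1" hour="$2" script="$3" log="$4"
  printf '%s %s * * * %s >> %s 2>&1\n' "$minute" "$hour" "$script" "$log"
}

cron_entry 0 2 /home/opc/epm_backup.sh /home/opc/epm_backup_cron.log
# prints: 0 2 * * * /home/opc/epm_backup.sh >> /home/opc/epm_backup_cron.log 2>&1
```

The printed line can be pasted into the editor opened by `crontab -e` for the user that owns the script.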

That is it! The solution is ready and live. I've been running this script for quite some time now and here is my OS bucket:

Sample log:

Notifications

Here is an email of a successful backup job:

Failed job:

There is also an alarm definition that fires if no files were copied in the last 24 hours; an alarm in firing mode means something is wrong. For demo purposes, I'm going to change the alarm definition to trigger if fewer than 2 objects were copied in the last 24 hours, so I can show what the alarm notification looks like and how it can be viewed in the OCI mobile application.

Here is a snapshot of the firing alarm. Notice that the alarm history shows the actual number of files copied (one file) against the value defined in the alarm (2), drawn as a red dotted line.

All topic subscribers will also get an email notification informing them the alarm is in firing mode. Note the alarm type is OK_TO_FIRING.

The cool thing about alarms is that you can view them in the OCI mobile application, which is pretty neat! You can see the status of the alarm, what time it fired and when its status was last OK.

Now I will change the alarm definition back so that it triggers only if no objects were copied in the last 24 hours, and show how the alarm status changes and what notification is sent to the topic subscribers. Note the alarm type is FIRING_TO_OK.

And the alarm notifications are gone on the mobile application.

That is it for this post. As you can imagine, the combination of EPM Cloud and OCI is quite powerful, and the sky is the limit when it comes to blending EPM Cloud and OCI resources to get the best out of Oracle Cloud.

Thank you for reading.
